February 9, 2015 – If Google, Uber and others are going to make self-driving vehicles a reality everywhere, then they have to get past one very large hurdle. In the last month, Google admitted that its technology cannot handle snow. It appears the radar, computer vision and sensors can be confused or even blinded when the white stuff starts falling. Heavy rain can have a similar deleterious impact. There is one other small snag in Google's autonomous vehicle platform: it has trouble detecting potholes in the road. Can it adjust to the bone-jarring impact of driving into one? What will that do to the on-board sensors?

These challenges go along with other known deficiencies in current autonomous vehicle technology, which include:

- an inability to drive in areas that have not been digitally mapped.

- an inability to deal with sudden, temporary changes to road conditions, such as signage for temporary closures or the appearance of a new stop sign or traffic light not in the vehicle's database.

- temporary blindness when driving directly into the Sun, which could cause the vehicle to miss a traffic light or sign in the same field of view.

- an inability to tell apart objects spotted on the road surface: is it a rock or a piece of crumpled paper?

- difficulty telling the difference between a pedestrian about to cross a street and someone standing at the side of the road and waving. Both appear as motion, but only one could actually lead to a collision and fatality.

These weaknesses will require improved sensors, better computer vision, exhaustive and continual digital mapping of all existing roads, faster uplinks and downlinks, and better software.

Today, autonomous vehicles need a prescribed route on which to drive. All current tests and pilot projects are designed to use them this way. And all existing autonomous vehicle projects are limited to traffic moving in only one direction. No autonomous vehicle has yet had to deal with fast-flowing oncoming traffic while making a quick left turn (or, for drivers in the United Kingdom, a quick right one).

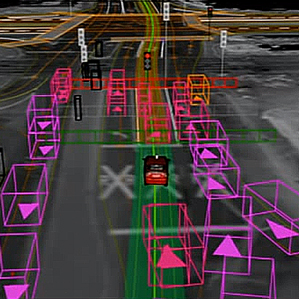

So although futurists see autonomous vehicles affecting the jobs of taxi and bus drivers, we are still a long way from that reality. For the moment the self-driving car is still a product in development, as the image above attests. Here we see how Google's self-driving car sees moving objects in real time. But it can only see them when they are overlaid on a pre-made map on which stationary objects such as traffic lights and stop signs are plotted. Change any one of these objects and the autonomous vehicle could be driving blind.

Google's self-driving car has always been a misleading PR stunt. If it can't think and be aware of as much of its environment as humans are, it can't actually drive. The practical self-driving car must await the arrival of sentient AI robots. That's unlikely before the 2030s, but will almost certainly happen by the 2040s. The same skills needed to drive cars can also drive trains, ocean vessels, airliners, backhoes, cement trucks, construction cranes, mining equipment and more; the list is nearly endless. By 2050, half of the human workforce will be unemployed, and the other half, mostly institutional professionals, will be under severe competitive pressure from the greater competence and efficiency of sentient robots.

Present lithium-ion battery technology is far from ideal, but it's good enough to power universal robots that can produce more robots and more batteries. That is to say, robot generations can be self-replicating. Robot brain and sensory system development is the major present technical impediment. The significance of IBM's recently announced neurosynaptic chip cannot be overstated. In three to six years the next generations of this microchip strategy will reach or exceed human brain capability. See: http://research.ibm.com/cognitive-computing/neurosynaptic-chips.shtml#fbid=heTWdJm0uKy

So the timelines will likely develop much as Ray Kurzweil projects.

I agree with you that self-driving vehicles of all types will characterize our world by mid-century. But as you know, we are already seeing limited use of AI-driven technology on closed circuits, and in Singapore an entire city district has launched an autonomous car initiative.

What you describe is simply what happens to a normal driver.

At least with GPS and dedicated software, there is hope of improving it definitively.

For humans, except with hard training, there will always be the same problem…

It reminds me of the Pony Express claiming to be faster than the train.

You make an interesting observation. Human drivers are also bad in snow, but for very different reasons. It's not a vision issue. It's the feel for the road surface. In that sense, an AI vehicle, with its on-board sensor package, would be far better at negotiating deteriorating surface conditions. But computer vision needs to be further enhanced to see through snow and heavy rain. And a human driver does not need an up-to-date digital map to drive effectively. A new construction sign may be an irritant to a human behind the wheel, but for an AI vehicle it is potentially an accident waiting to happen, or a reason for the vehicle to come to a dead stop.