September 17, 2019 – Today’s post marks the 2,800th on this site. In over 9 years I have shared with you the science and technology advancements of interest to me and you, my readers. I thank you for making my voice heard, and for your continued loyalty as subscribers and readers.

Today I have interpreted the words of Peter Diamandis in the third contribution of his series on augmented reality (AR), which looks at converging technologies in the world of Web 3.0. I apologize for the length, which I have considerably pared down and edited in the hope that you find the information of value. As always, I welcome your comments.

How each of us sees the world is about to change dramatically. For all of human history, looking at the world was roughly the same for all of us. But now the boundaries between the digital and physical worlds are beginning to fade. Today we have a world layered with digitized, overlaid information that is rich, meaningful, and interactive. As a result, our experiences in perceiving the environment around us are becoming vastly different, personalized to our goals, dreams, and desires.

Welcome to Web 3.0, also known as The Spatial Web. Web 1.0 featured static pages and read-only interactions limited to one-way exchanges. Web 2.0 provided multimedia content, interactive web pages, and participatory social media, all of this mediated by 2D screens. But today we are witnessing the rise of Web 3.0, riding the convergence of high-bandwidth 5G connectivity, rapidly evolving AR eyewear, an emerging trillion-sensor economy, and ultra-powerful artificial intelligence (AI). The result is we will soon be able to superimpose digital information atop any physical surrounding, freeing our eyes from the tyranny of the screen, immersing us in smart environments, and making our world endlessly dynamic.

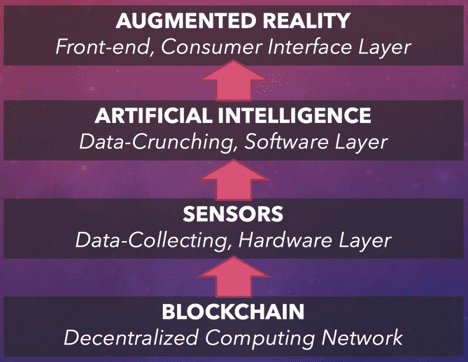

Let us explore this world further by looking at how these technologies will lead to an amazing convergence among AR, AI, sensors, blockchain, and other innovative technologies that make up the world of Web 3.0.

A Tale of Convergence

Let’s deconstruct everything beneath the sleek AR display. It begins with the Graphics Processing Unit (GPU), an electronic circuit that performs rapid calculations to render images. GPUs today are found in mobile phones, game consoles, and computers. Because AR requires significant computing power, a single GPU does not suffice. Instead, using blockchain, we can enable distributed GPU processing power. As a result, blockchains specifically dedicated to AR holographic processing are on the rise.

Other features include:

- Cameras and sensors to aggregate real-time data from any environment and seamlessly integrate the physical and virtual.

- Body-tracking sensors to critically align a user’s self-rendering in AR within a virtually enhanced environment.

- Depth sensors to provide data for 3D spatial maps.

- Cameras to absorb more surface-level, detailed visual input.

- Sensors to collect biometric data, such as heart rate and brain activity, to incorporate health-related feedback to AR interfaces.

- AI to process enormous volumes of data instantaneously with embedded algorithms to enhance customized AR experiences including everything from artistic virtual overlays to personalized dietary annotations.

AI in retail AR applications will use your purchasing history, current closet inventory, and possibly even mood indicators to display digitally rendered items most suitable for your wardrobe, tailored to your measurements.

In healthcare, AI built into AR glasses will provide physicians with immediately accessible and maximally relevant information, parsed from a patient’s medical record and the current state of research relevant to that patient, to aid in accurate diagnoses and treatments. This frees doctors to focus on improving their bedside manner and educating patients.

Convergence in Manufacturing

How will AR and the Web 3.0 world impact manufacturing?

Looking at large producers, it appears highly likely that over the next ten years, AR along with AI, sensors, and blockchain will multiply manufacturer productivity and vastly improve employee experiences.

(1) Convergence with AI

In the initial application, digital guides superimposed on production tables can vastly improve employee accuracy and speed, while minimizing error rates.

Already, the International Air Transport Association (IATA), responsible for 82% of global air travel today, has implemented the use of Atheer AR headsets in cargo management, reporting a 30% improvement in cargo handling speed and no less than a 90% reduction in errors.

A similar success is occurring at Boeing, which has brought Skylight AR glasses to the production line, with the company experiencing a 25% drop in the time needed to produce an aircraft and near-zero error rates.

Beyond air travel and aircraft manufacturing, smart AR headsets can be used for on-the-job training without reducing the productivity of other workers. Land Rover, for instance, has implemented Bosch’s Re’flekt One AR solution to let its technicians see inside a Range Rover Sport vehicle without removing the dashboard.

And AI will soon provide go-to expertise to workers on other assembly lines, offering instant guidance and real-time feedback to reduce production downtime, boost overall output, and even assist customers at home in do-it-yourself assembly (think IKEA).

AR guidance to mitigate supply chain inefficiencies is perhaps one of the most profitable business opportunities for implementing AI and AR together. By coordinating moving parts, eliminating the need for manned scanners at checkpoints, and directing traffic within warehouses, AR systems with AI should vastly improve workflow and quality assurance. A case in point is DHL, which initially implemented AR “vision picking” back in 2015. Now the company has expanded the scope using Google’s newest smart lens in warehouses across the planet and has noted a 15% jump in productivity. The DHL investment amounts to $300 million USD.

Ash Eldritch, CEO of Vital Enterprises, describes the convergence of these technologies and their ultimate impact stating, “All these…coming together around artificial intelligence are going to augment the capabilities of the worker and that’s very powerful. I call it Augmented Intelligence. The idea is that you can take someone of a certain skill level and by augmenting them with artificial intelligence via augmented reality and the Internet of Things…elevate the skill level of that worker.”

Eldritch’s point is well made by companies like Goodyear, ThyssenKrupp, and Johnson Controls, which have adopted Microsoft HoloLens 2 in their manufacturing and design operations to elevate workers’ skills. What is most heartening in all of this is that AI isn’t taking away human jobs but rather, through an AR interface and collaboration, enhancing them.

(2) Convergence with Sensors

On the hardware front, AI-enhanced AR systems will see a mass proliferation of sensors. These will detect what’s happening in the environment and, through the application of computer vision, contribute to decision-making.

The current technologies used to sense the environment measure depth by using scanning sensors to project a structured pattern of infrared light dots onto a scene and generating 3D maps from the input. Alternatively, stereoscopic imaging, which deploys two lenses, produces equally accurate measurements.
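For readers curious about how two lenses yield a depth measurement, here is a minimal Python sketch of the textbook pinhole stereo relation, depth = focal length × baseline ÷ disparity. The function name and the sample numbers (a 700-pixel focal length, lenses 6 cm apart) are my own illustrative assumptions and do not describe any particular headset.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Estimate distance to a point seen by two parallel cameras.

    focal_length_px: lens focal length expressed in pixels (assumed value).
    baseline_m:      distance between the two lenses in metres (assumed value).
    disparity_px:    horizontal shift of the same point between the two images.
    """
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Nearby objects shift more between the two views, so larger disparity means smaller depth.
for disparity in (10.0, 21.0, 42.0):
    print(disparity, "px ->", round(stereo_depth_m(700.0, 0.06, disparity), 2), "m")
```

The takeaway is the inverse relationship: halve the disparity and the estimated distance doubles, which is why stereo accuracy falls off for faraway objects.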

But there is a new method being used by Microsoft’s HoloLens 2 and Intel’s RealSense 400-series camera, a process called “phased time-of-flight” (ToF). How does it work? The HoloLens 2 is equipped with numerous lasers, each with 100 milliwatts (mW) of power, that fire in quick bursts. The headset automatically measures the distance to nearby objects by comparing the light in the returning laser beam with the original signal; the returning light shows a slight phase shift. That phase difference allows an accurate reconstruction of what lies in front of the AR headset wearer. The advantage of phased ToF sensors is that they are silicon-based, cheap to produce, and require far less computing overhead.
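The phase-shift idea reduces to a short formula, which I have sketched below in Python. It assumes the standard continuous-wave model in which light is modulated at a known frequency and distance = c × phase shift ÷ (4π × modulation frequency). The 100 MHz modulation frequency and the function names are illustrative assumptions of mine, not published HoloLens 2 specifications.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def phase_to_distance_m(phase_shift_rad: float, modulation_hz: float) -> float:
    """Distance implied by the phase shift between emitted and returned light."""
    # The light travels out and back, so the round trip covers twice the distance;
    # that is why the denominator uses 4*pi rather than 2*pi.
    return SPEED_OF_LIGHT_M_PER_S * phase_shift_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range_m(modulation_hz: float) -> float:
    """Farthest distance measurable before the phase wraps past a full cycle."""
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * modulation_hz)


ASSUMED_MODULATION_HZ = 100e6  # hypothetical value chosen for illustration only

print(round(phase_to_distance_m(math.pi, ASSUMED_MODULATION_HZ), 3))
print(round(unambiguous_range_m(ASSUMED_MODULATION_HZ), 3))
```

With these assumed numbers a half-cycle phase shift corresponds to roughly 0.75 metres, and the sensor can measure out to about 1.5 metres before the phase repeats. Lower modulation frequencies extend that range at the cost of precision.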

Other features in the HoloLens 2 include a built-in accelerometer, gyroscope, and magnetometer, which together provide the kind of inertial measurement typically used by navigation systems in airplanes and spacecraft. With its four “environment understanding cameras,” HoloLens 2 can track head movements, while its 2.4-megapixel high-definition video camera and ambient light sensor support advanced computer vision. This is pretty neat stuff!

But Microsoft isn’t the only one pushing the technology envelope for digital displays. Nvidia is working on a Foveated AR Display that brings the primary foveal area of the eye, the part of the retina that permits 100% visual acuity, into sharp focus while letting peripheral regions fall into softer background images, which mimics natural visual perception.

Then there are gaze tracking sensors that grant users control over their screens without touching or hand gestures. Through simple visual cues such as staring at an object for more than three seconds, the technology can activate commands.
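To make the “stare to activate” idea concrete, here is a minimal Python sketch of a dwell timer. The class name `DwellSelector`, its fields, and the object labels are hypothetical, and a real headset would feed it from an eye tracker rather than from hand-written timestamps.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DwellSelector:
    """Fires a command once when the gaze rests on one object long enough."""

    dwell_seconds: float = 3.0  # threshold taken from the "three seconds" example
    _target: Optional[str] = field(default=None, init=False)
    _since: float = field(default=0.0, init=False)
    _fired: bool = field(default=False, init=False)

    def update(self, target: Optional[str], now: float) -> Optional[str]:
        """Report the object currently looked at; returns it when a command triggers."""
        if target != self._target:
            # Gaze moved to something else (or to nothing): restart the timer.
            self._target, self._since, self._fired = target, now, False
            return None
        if target is not None and not self._fired and now - self._since > self.dwell_seconds:
            self._fired = True  # trigger only once per uninterrupted stare
            return target
        return None


selector = DwellSelector()
samples = [("lamp", 0.0), ("lamp", 2.0), ("lamp", 3.5), ("lamp", 4.0), ("door", 4.5)]
for label, timestamp in samples:
    print(timestamp, label, "->", selector.update(label, timestamp))
```

In this sketch only the sample at 3.5 seconds triggers a command, because it is the first moment the stare on the lamp has lasted more than three seconds. Glancing away resets the clock, which is what keeps casual looking from setting things off.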

In other applications and industry niches, stacked convergence of blockchain, sensors, AI and AR will prove to be highly disruptive. A case in point is the field of healthcare where biometric sensors will soon give users a customized AR experience. Already, MIT Media Lab’s Deep Reality group has created an underwater VR relaxation experience that responds to real-time brain activity detected by a modified version of the Muse EEG. The experience even adapts to users’ biometric data, from heart rate to electro-dermal activity which is picked up through an Empatica E4 wristband.

Sensors converging with AR will improve physical-digital surface integration, enable intuitive hand-and-eye controls, and increasingly personalize the user’s augmented world. Companies to watch include MicroVision, which is making tremendous leaps in sensor technology.

(3) Convergence with Blockchain

Because AR requires much more compute power than the typical 2D experience, centralized GPUs and cloud computing systems are needed to provide the necessary infrastructure. The workload requirements are taxing and that’s where blockchain may prove a good solution.

Otoy is a company aiming to create the largest distributed GPU network in the world, which it calls the Render Network or RNDR. It has been built specifically on the Ethereum blockchain for holographic media and is currently being beta tested. This network could revolutionize AR deployment accessibility. One of Otoy’s investors is Eric Schmidt, former Chairman of Alphabet. Schmidt states, “I predicted that 90% of computing would eventually reside in the web-based cloud… Otoy has created a remarkable technology which moves that last 10%, high-end graphics processing, entirely to the cloud. This is a disruptive and important achievement. In my view, it marks the tipping point where the web replaces the PC as the dominant computing platform of the future.”

RNDR allows anyone with a GPU to contribute their power to its network, earning a commission of up to $300 a month in RNDR tokens, a cryptocurrency. These can be redeemed for cash or used to create AR content. In this way Otoy’s blockchain network allows designers to profit when not using their GPUs, and makes it easier for newer members to enter the field. Beyond rewarding those who supply the network’s power, distributing GPU processing power will allow manufacturing companies to access AR design tools and customize learning experiences. In this way deploying AR using blockchain will boost usage and deployment across many more industries.

For consumers, a new startup, Scanetchain, is also entering the blockchain-AR space to allow users to scan items using a smartphone. The app then provides access to a trove of information from the manufacturer, including price, origin, and shipping details. Based on NEM, a peer-to-peer cryptocurrency that implements a blockchain consensus algorithm, the Scanetchain app aims to make information far more accessible and, in the process, create a social network of purchasers. Users earn tokens through watching ads, and all transactions are hashed into blocks and securely recorded. For brick-and-mortar stores, the writing may be on the wall, as blockchain becomes the glue for cloud-based retailers.
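What does it mean for transactions to be “hashed into blocks”? The Python sketch below shows the general idea of a hash chain using the standard library’s SHA-256. It is a teaching illustration only: the block layout, field names, and sample token records are my own assumptions and do not reflect NEM’s or Scanetchain’s actual data formats or consensus rules.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the very first block


def block_hash(previous_hash: str, transactions: list) -> str:
    """Fingerprint a batch of transactions together with the prior block's hash."""
    payload = json.dumps({"prev": previous_hash, "txs": transactions}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(batches: list) -> list:
    """Link each batch of transactions to the block before it."""
    chain, previous = [], GENESIS_HASH
    for transactions in batches:
        current = block_hash(previous, transactions)
        chain.append({"prev": previous, "txs": transactions, "hash": current})
        previous = current
    return chain


def is_valid(chain: list) -> bool:
    """Recompute every hash; any edited transaction breaks the chain."""
    previous = GENESIS_HASH
    for block in chain:
        if block["prev"] != previous or block["hash"] != block_hash(block["prev"], block["txs"]):
            return False
        previous = block["hash"]
    return True


chain = build_chain([
    [{"user": "alice", "tokens": 1}],  # hypothetical ad-viewing rewards
    [{"user": "bob", "tokens": 2}],
])
print(is_valid(chain))
chain[0]["txs"][0]["tokens"] = 99  # tamper with a recorded reward
print(is_valid(chain))
```

Because each block’s fingerprint includes the one before it, quietly altering an old record invalidates everything that follows. That is the sense in which such records are said to be secure.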

Final Thoughts

Integrating AI into AR creates an “auto-magical” manufacturing pipeline that will fundamentally transform our industries, cutting down on marginal costs, reducing inefficiencies and waste, and maximizing employee productivity. Bolstering this will be sensor technology, which is already blurring the boundaries between our augmented and physical worlds. And while intuitive hand and eye motions will dictate commands using hands-free interfaces, biometric data is poised to customize each of our AR experiences to be far more in touch with our mental and physical health. And all of this will be underpinned by distributed computing power with blockchain networks.

This is the promise of AR in the Web 3.0 world. Whether in retail, manufacturing, entertainment, or elsewhere, the stacked convergence described above will receive significant investment in the next decade. The evidence is already present with 52 Fortune 500 companies beginning to test and deploy AR/VR technology. And while global revenue from AR/VR stood at $5.2 billion USD in 2016, IDC, the market intelligence firm, predicts that number will grow to $162 billion in value by next year.