July 15, 2017 – Computer scientists at Brown University in Providence, Rhode Island, have developed new software that makes it easier for robots to understand human requests. The research, led by Dilip Arumugam and Siddharth Karamcheti, both undergraduates at the time (Arumugam is now a graduate student), focused on natural language commands and how robots interpret them. Arumugam states, “the issue … is language grounding … The problem is that commands can have different levels of abstraction, and that can cause a robot to plan its actions inefficiently or fail to complete the task at all.”

Arumugam gives the following example.

A warehouse worker shares a workload with a robot forklift standing next to him or her. When the worker says “grab that pallet,” how should the robot interpret this? Which pallet, for example: the one in front of it, the one to the right, or one across the floor? In addition, “grab that pallet” isn’t the same as “lift that pallet,” which the worker may mean to imply but which the robot does not automatically infer. A robot receiving such a request may simply do nothing without further instruction.

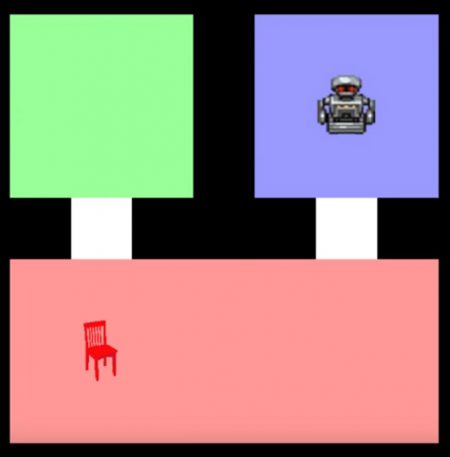

So Arumugam and Karamcheti devised software that analyzes the language of a command, infers what is being asked within the robot’s spatial environment, and accounts for the command’s level of abstraction when executing a task. They then ran a virtual test in which volunteers instructed the robot software to move an image of a chair from a virtual red room to a virtual blue room. Instructions ranged from a high-level command such as “take the chair to the blue room” to step-by-step instructions such as “take five steps north, turn right, take two more steps, get the chair, turn left, turn left, take five steps south.” Through repeated testing in the virtual environment, the software learned how to apply inference to an abstract spoken request.

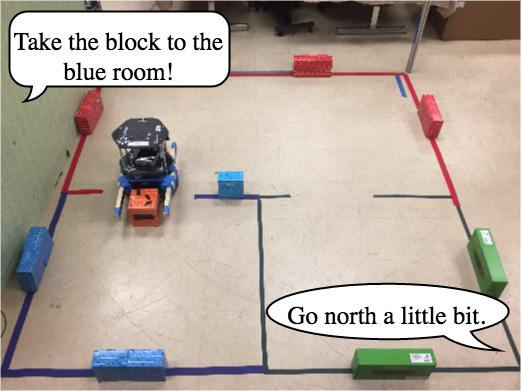

The two researchers then decided to use the software with a Roomba-like robot in the real world. When the robot inferred the task objective and was then given specific additional instructions, it responded to the spoken command within 2 seconds 90% of the time. When no specifics were added, the robot took 20 seconds or more to act on the abstract command.

Stefanie Tellex, a professor of computer science at Brown, states, “This work is a step toward the goal of enabling people to communicate with robots in much the same way that we communicate with each other.”