March 7, 2018 – The drone armies of Star Wars episodes may turn from fiction to fact. In a recent issue of The Economist, a special report on the future of war describes the rise of battlefield robots capable of making choices about killing humans independently of those who deploy them. Nations like Germany are pushing for a ban on such weapons, and an international campaign to stop countries from building and deploying them is underway.

Despite that, research and testing of these weapons are underway in the United States, China, Russia, and elsewhere, in a new arms race to deliver what Duke Robotics, a developer of the Octocopter, a drone mounted with semi-automatic guns and 40-millimeter grenades, describes as a war with “no boots on the ground.” Duke, a fairly recent startup, is in the process of raising $15 million US to grow the company and states it has received an endorsement from Israel’s Ministry of Defense.

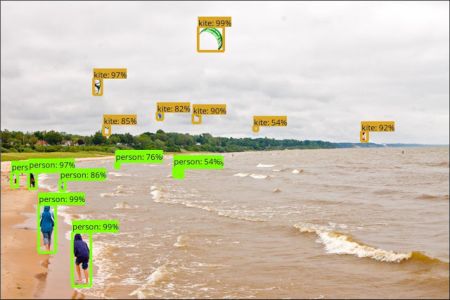

Recently, Google has given the United States Department of Defense (DoD) assistance in analyzing images from drone surveillance. The tool, TensorFlow, introduced in 2015, is open source software focused on machine learning, a form of artificial intelligence (AI) that Google is using for its Translate mobile application. In this benign application, TensorFlow lets a user instantly translate printed pages in foreign languages by pointing a smartphone camera at them. Google is also using the technology to improve speech recognition accuracy for its “OK Google” mobile and “Hey Google” home appliance technologies. And third parties such as AXA, a large insurer, use TensorFlow to predict the incidence of high-cost traffic accidents, while other insurers are applying the technology to healthcare coverage.

But the dark side of any technology, including AI, is the ability to use it in war. So far DoD has stated that its TensorFlow use is focused on integrating large amounts of data to harvest new intelligence. The Pentagon’s application built using the tool is designed to automatically analyze drone footage to provide details not easily detected by human observers. It has been deployed in the field in Syria and Iraq, where drone footage from surveillance of the self-proclaimed caliphate, the Islamic State, is being analyzed. Sorting out and identifying objects is the current mission. But it isn’t a leap to consider that such a technology could become one that picks targets from among those objects and directs drones to attack them.

The Pentagon has called its recent venture into AI, machine learning, and autonomous machines for use in and around the battlefield the Third Offset Strategy. The hoped-for end result of its military research and procurement is AI tools and technology capable of dealing with the conflicts anticipated over the next 20 years and beyond.

Russia and China are no strangers to the disruptive effect of the new technology on military strategy. The nuclear balance, which has restrained the Great Powers on our planet from major confrontations, is now threatened by the rise of autonomous weapons and AI. The way we think of war, and the way it will be fought as the new technology enters military arsenals, presents a moral dilemma. Generals and their soldiers have mixed opinions about using these systems and weaponry. In July of 2017, the second highest-ranking member of the U.S. military, General Paul Selva, stated that the military needs to keep “the ethical rules of war in place lest we unleash on humanity a set of robots that we don’t know how to control.” He further stated, “I don’t think it’s reasonable for us to put robots in charge of whether or not we take a human life.”

Scientist Stephen Hawking, SpaceX’s Elon Musk, and others have argued emphatically against developing autonomous weapons, calling for a universal ban. In addition, 65 human rights organizations in 28 countries have warned against militaries acquiring AI-driven and autonomous weapons that could end up independently deciding to target and kill humans. A global campaign founded in 2012 states on its website, “Giving machines the power to decide who lives and dies on the battlefield is an unacceptable application of technology. Human control of any combat robot is essential to ensuring both humanitarian protection and effective legal control.” At a security conference this week, Germany restated its position that it will not procure any form of autonomous systems for military purposes. The German military, however, intends to be able to defend itself against such weapons if used by other countries. But should non-proliferation of AI and autonomous weapons fail as badly as it has for nuclear weapons, what will a military using this technology look like on battlefields of the future?

War will speed up with autonomous robots and AI making tactical decisions on the battlefield, and humans will struggle to keep pace. A smart weapon will be sensor-laden, with AI onboard and the ability to adjust strategy as battlefield conditions change. Decisions will be carried out at light speed. Autonomous machines will be in the sky, on land, and underwater. They will be far cheaper to build and operate than a stealth bomber, an Abrams tank, or a nuclear submarine. To borrow a Peter Diamandis term, warfare will be demonetized. Real-time surveillance of the entire battlefield will be ubiquitous, with drones ranging from nano to copter-size. For humans deployed to the battlefield, whether in the air, on land, or at sea, an army of robots will provide intelligence and total logistical support. Robotic systems working in swarms will envelop enemy positions, while robot defenders will attempt to disrupt their communications or take them out by other means.

In the end, the greatest fear is that armed robots with built-in machine intelligence could turn on their creators, leading to an existential crisis for humanity. Or maybe intelligent robots and AI systems designed for war will consider their human creators, decide that fighting to the death is “inhumane and un-machinelike,” and simply stop doing the bidding of humanity, ending the carnage.